Tech Buzzwords What Destroyed Our Industry

words have meaning. meanings have power. power can be shared or power can be stolen. stolen power means power accumulated. accumulation in fewer and fewer entities leads to centralization of economic power. power is limited. conservation of power.

since words have meanings and meanings have power, the purpose of words changes depending on social or cultural context. Maybe you hear someone say “i have the cripples” and you run around screaming “you can’t say that!” but they are from a different culture and actually just meant they have a sports injury. Maybe you listen to genres of socio-cultural music where certain words are popular for expression but forbidden for your own casual usage. Words and meanings vary based on context and intent and source and compassion.

so what about words and meanings in tech spheres? let’s break down: tech buzzwords

tech buzzwords

tech buzzwords are defined by a single conflict:

- terms with very narrow direct technical meanings

- terms gaining popularity outside of their direct technical meanings usually by media/executives/smoothbrains

(tech buzzwords are different from marketing buzzwords: a marketing buzzword is something completely meaningless with only ego and advertising powering its spread, while tech buzzwords start from concrete technical meanings then get diluted over time as more people try to use words for manipulation or social status.)

thus the tech buzzword discontinuity is born: very specific technical terms used by a minority of knowledgeable experts spread too widely, and terms with specific technical meanings end up spread mostly by people with no capacity or capability to understand the full meaning of the words/concepts/trends they attempt to execute.

such discontinuity spreads via “thought leaders” consumed by pure abstract sound generation. they communicate in terms they don’t understand, but they know these sounds have strong (and perhaps profitable) meanings for other people, so if they can just imitate the sounds without needing any education or understanding or experience, they can continue to act as “executives” and maintain their own personal social power structure with declining actual relevance in the world. as a recent example: every executive who derides modern AI language models as unusable because they are just “stochastic parrots” after reading a NYT article from 2022 for 5 minutes needs to only look in a mirror to find the real chicken in the room.

we can all probably think up dozens of buzzwords both currently active and historically active (as with all tech fads, popular highfalutin status-shaped terminology tends to decay over time), but what are the buzzwords meeting criteria of being both widely used and heavily damaging to the tech industry as a whole? stay tuned to find out.

words of the buzzing persuasion

first off, the worst terminology responsible for the destruction of 5 generations of programmers and entire economies so far: free software / open source.

free software / open source (same thing)

The terms “Free Software” and “Open Source” have been responsible for widespread economic value destruction and widespread economic profit concentration over the past 30 years, transferring value from creators to economic manipulators at the creators’ expense.

Look at the absolutely lefucked definition from wikipedia:

For other uses, see Free software (disambiguation). Not to be confused with Freeware.

Free software, libre software, or libreware[1][2] is computer software distributed under terms that allow users to run the software for any purpose as well as to study, change, and distribute it and any adapted versions.[3][4][5][6] Free software is a matter of liberty, not price; all users are legally free to do what they want with their copies of a free software (including profiting from them) regardless of how much is paid to obtain the program.[7][2] Computer programs are deemed “free” if they give end-users (not just the developer) ultimate control over the software and, subsequently, over their devices.[5][8]

A recurring trend in the buzzword problem of words-vs-meanings: low information people (executives/media) use literal words or phrasing as their only reality anchor, then just hallucinate ungrounded secondary meanings from a lack of technical experience because they can’t be bothered to “learn” new “things” outside of their own day-to-day auto-generated life history.

Here, we see “free software” is already an absolute disaster. One phrase with potentially 5 different primary ways to express it, then the second sentence of the definition (which all the low information manager/executives will never bother understanding) says “free” isn’t “free” as in “price,” it’s “free” as in libre as in libre!!! LIBERTY FOR ALL! LIBRE LIBRE LIBRE!

literally nobody outside of 12 hypernerds cares about engaging in 5 hour long debates about “free” vs “freedom” vs “libre” vs “liberty” vs “openoffice” vs “libreoffice.” The nerds surrendered control of society by “um, but akshually the meaning is…” from day 1 because nobody with power or authority cares about understanding “deeper meanings” of poorly thought through hippy buzzwords, so “free as in freedom” turned into “free as in you make it then I sell it and keep all the profit from selling zero-cost-of-input goods under capitalism.”

All managers see is “free” == “never gotta pay for it” and the world moves on. You can’t inject definitions into people’s minds when they already have motivation to believe a worse definition they can imagine unlimited exploitation or profit against (also a recurring theme in buzzwords: manipulation or false extrapolation of a term for profit over actual usage of technical meaning).

maybe it was a bad idea letting own-nothing-and-never-earn-money-because-we-grew-up-in-a-hippy-commune cult members establish the groundwork for all computing terminology in what grew into our modern multi-trillion-dollar industries.

“You see Free Software has been so successful because we have shown we can develop software without any money.”

It’s a shared failure of our entire industry to not reel in these poor definitions and ambitions before the default mode of software development became: individuals use their personal time and years of life experience to make reusable software available with no conditions, then corporations discover thousands of pre-written libraries and services written under the guise of “free software” can be used as acceleration factors to generate billions of dollars in private extractive profit without ever paying for the inputs to the company. Instead of paying resource creators to build the world, you steal everybody’s work then hire your own private 6-month-old API glue developers to just endlessly recombine public work into private solutions. Corporations using “free software” to generate profit without paying creators is on par with any zero-cost-oh-look-it-just-appeared resource extraction like trafficking wildlife or natural resource fencing.

one argument against paying software creators is “but software is used everywhere! it’s impossible to even track who created what!” meanwhile, the hollywood-riaa-industrial-complex, powered by 80,000 lawyers who all hate each other, legally requires every second of broadcast audio be classified with an owner of who to pay for audio royalties no matter when any media is broadcast anywhere in the world. If they figured such a system out 60 years ago, there’s no excuse for companies not paying open source developers today except — gasp! — feigning economic ignorance in furtherance of their own private profit accumulation. it’s impossible to pay you because it would require stuff like math and databases so clearly it’s impossible! now go back to generating 100,000% more value than we’re compensating you for!

it’s also estimated the Jeopardy game show theme song, written by one person in 30 seconds about 60 years ago, has earned him over $70 million in replay royalties because he gets paid every time the song airs in any jeopardy episode any time of day in any country anywhere in the world. sure, hollywood audio media can manage this half-century centrally managed giant passive wealth accumulation hack, but when I spend 10,000 hours writing software and it gets deployed to a million servers for free, suddenly “nobody can track it” and “we don’t have to pay you” and “get out of my dining room or i’m calling the cops again.”

note: we are intentionally ignoring the history of things like tivoization, static vs dynamic linking, ibm/sco, gpl-vs-lgpl-vs-open-source, statically-link-but-communicate-over-pipes-to-avoid-bundling, saas-wrap-source-so-licenses-never-apply, aws-ification-of-stealing-experience-for-private-profit-hosting, …

why this is personally annoying: i’ve written software in the past. software i’ve written has been hosted and sold “as a service” by aws, gcp, azure, others, for maybe ten years now (and obviously i’m not the only one this happens to at an individual level). they make yearly profits off my software, but they never contribute anything back. the last time I tried to interview at some of those places, I think they said I was only qualified to be a “junior load balancer support engineer on call 3 weeks per month” so… why bother doing anything? i’m so glad i’ve spent years of my life writing software to subsidize trillion dollar companies and yet i still can’t afford a place to live since rent goes up 7% per year but my comp hasn’t grown in 10 years. but, joke’s on them because i’ve written even better server software, but i haven’t released it so they can’t steal it, but also they will never pay for software, so we’re at a fun impasse where i write best-in-the-world services and just never release them. monop gonna monop i guess.

and we’re walking… — now, it is time for destructive extractive buzzword the second:

devops (sic)

you got your dev in my ops! no, you got your ops in my dev!

what the devops buzzword means: a process for sharing production release responsibility between teams.

what managers and executives hear: “dev” and “ops” as one word? “devops?” Now we get to fire our entire admin/operations/infrastructure team because now DEV IS OPS! DEV IS OPS! DEVOPS == DEVS DO OPS! SO WE DO NOT HIRE DIRTY EXPERT INFRASTRUCTURE PEOPLE EVER AGAIN! SUCH COST SAVINGS!

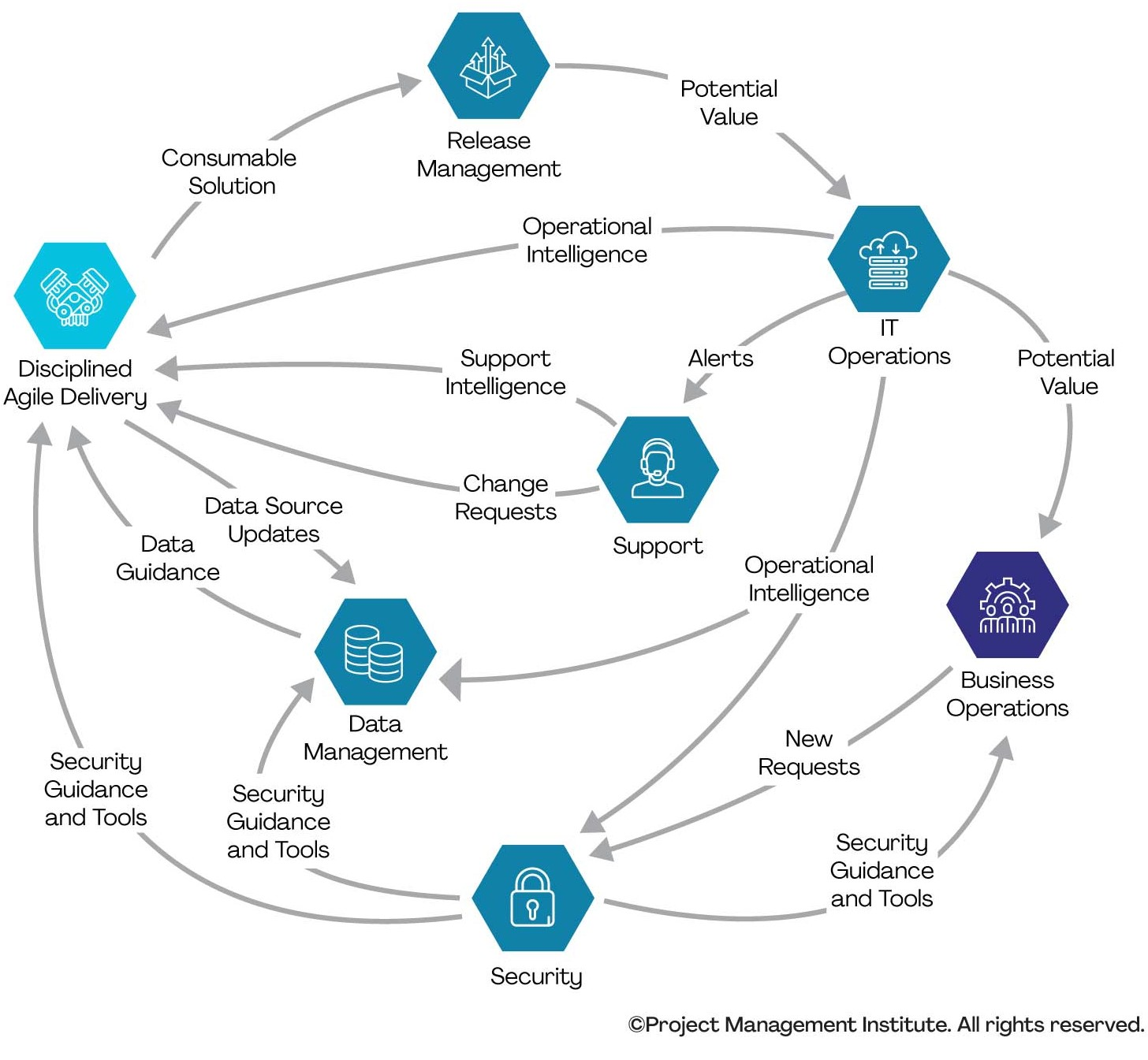

so, what is devops? there is the standard “devops diagram”, which is just a degenerate form of “ITIL for normies,” but it truly is the only meaning of devops: devops is just a process for team integration.

Under no circumstance does “devops” mean “dev does ops so we fire all our ops!” — except, you can imagine to managers and executives, it’s much more appealing to think “this industry-wide buzzword all my other executive friends are so hype about means I can FIRE HALF MY TEAM! YES! DEATH TO NON-AGILE-NON-PRODUCT-FEATURE HEADCOUNT!”

all while ignoring, now your developers have 20% to 50% reduced productivity because instead of having dedicated admin/ops/infrastructure professionals with experience and focus on long-term vision for technical solutions, you just refuse to have employees dedicated to infrastructure and scalability and security and compliance. Now your remaining employees are scattered as “trial and error” or “practice by failure” perpetual infrastructure novices trying to manage 50 different AWS products they don’t understand every day. congrats, you saved $1 million per year by refusing to have infrastructure management/reliability/networking/storage/configuration/operating system/scalability/compliance/security/process-management/product-review headcount, but instead you are now costing your company $5 million per year in lost productivity due to offloading all those extra company requirements on to your “precious saas api copy/paste glue developers.” amazing expert executive judgment there, you’ll be on the cover of time magazine any day now.

of course, you may say, we respect devops because we have a dedicated devops department! which is also quite bunk. devops is a process between departments so having a devops department is like having an agile department: it doesn’t make sense. you just renamed traditional operations and infrastructure people as “devops” because: trendy buzzwords. companies with devops departments also vary wildly between “Devops just runs our CI/CD” to “devops is ops here but we don’t call it ops or SRE (‘service restart engineer’ or ‘sysadmin really expensive,’ take your pick) because buzzwords.”

if there’s any constant in the tech industry, it’s the future is built by people who half understand the past and fill in their misunderstanding gaps with outright hallucinations as they grow bigger.

why the guttage of actual productive systems management/infrastructure/ops departments though? I think it comes back to hierarchy of decision making. How do you hire a lawyer if you have no experience being a lawyer yourself to judge who is a good lawyer? or good medical doctor? or good entomologist? One approach: fear; just don’t do it! Avoid engaging experts if you don’t need them (but you don’t have the experience to know you don’t need them either). This is largely why the “devops movement” destroyed infrastructure departments across the industry: you can’t hire something you don’t understand, and infrastructure work is very specialized and very experience-based. You can’t leetcode your way through an infrastructure interview because abstract solutions are irrelevant, and rando startup CEOs or “software engineering managers” have no experience in infrastructure to evaluate actually qualified or exceptional candidates, so… you just don’t do it. Let your other employees fake your infrastructure using overbilled AWS services forever to avoid needing to extend your own managerial competence (because employees are usually really good at one thing: never admitting when they are just making it up and hoping nobody else notices, which can go on for years or decades in some companies, so you never realize you are missing entire departments at your company causing everything to always be in slow motion mid-collapse mode!).

double of course, the second unofficial hallucinated definition of devops is: “ops are devs” meaning your infrastructure people must be full theory-of-computation developers, which traditionally hasn’t always been the case. historically, many “admins” or “ops” followed a different life path of maybe being promoted out of tech support or dropping out of community college to “run computers,” then “run datacenters” then “run services running in datacenters,” because the job was mostly using vendor products and knowing vendor products with glue scripts here and there but maybe not total automation in the middle. this was very much a more historically traditional tradesperson / apprenticeship capable life path where you become an expert from “learning by doing” in focused areas over time. but the second unofficial hallucinated definition of devops says “ops is now code and code is developers so all infrastructure people must pass a platinum level l33tc0de check before we trust them” which is a clever form of class warfare by trying to rugpull the livelihood of a million productive knowledgeable professionals because now the entry criteria of “our interview process requires you write code to discover a hamiltonian cycle in these graphs on a whiteboard in 7 minutes” has nothing to do with actual implementation or experience or the job description of maintaining infrastructure and service excellence over time.

(we’re seeing something similar to the “devops is ops must be computational theorist programmers” false hiring restrictions in AI things now too… all the AI companies are 100% focused on hiring 28 year old PhDs from stanford and refuse to entertain anybody from any different background… it feels like some sort of “elite value capture” or class-based tech hiring warfare or something. outcomes undetermined, but i’d rather bet the AI future on practical implementers with physical machine and systems experience than trust weird in-group-only theorists who think the rest of the world is so dirty they must be protected from ever working with anybody who hasn’t wasted their life also getting a phd (made up) in computer science (also made up), or worse, alignment (super made up))

what’s hilarious is the world has become computationally inverted. most startups would be better off just buying $100k of server hardware up front these days and they would be set for years instead of paying AWS $500k/year for 10 years on bloated services nobody really needs. Constraints breed creativity and efficiency, but just saying “here’s AWS, you can deploy 3 or 3,000 servers any time you want for anything” is the worst thing you can do (say lambda api gateway one more time i f’ing dare you) when your entire company is populated with infrastructure novices in the first place (the last company I helped with AWS problems created their entire company by “just click to deploy RDS” servers, except they did it 5 years ago and never looked again, so the single DB for their entire company had grown to 5 TB and had been hosted on a deprecated legacy single core DB instance with 16 GB RAM and slow storage and slow networking running at 99% CPU and 99% memory usage continuously for 3 years and they never noticed because they were incompetent with infrastructure (after I fixed it all, their analytics pipeline went from taking 60 hours to only 30 minutes because of course they were running long-term analytics on their live customer database too) but hey, it’s a cloud native saas-first devops world so think of all the money they saved by never hiring anybody with experience (plus all the lost customers because the app was just continuously slow)).

why this is personally annoying: i tend to prefer infrastructure work because it’s more portable, except all the infrastructure jobs are gone now due to AWS monopolies and companies de-skilling their workforce as “Devops cost savings because devs does ops!!!” would you rather your resume say “i spent 8 years working on the MEEBO TOOLBAR” which doesn’t exist anymore and never mattered, or say “i helped build out and maintain a 90,000 server infrastructure platform with automated monitoring, automated failure detection, automated recovery, and self-service deployment portals for developers?” After working for your 5th startup with a failed product due to failed management and having an endless sea of dead companies on your resume, at least your infrastructure experience is endlessly reusable and recombinable in any number of future employment scenarios. Nobody can take away your experience of building out high performance networks and database systems and caching systems and backup systems and alerting and monitoring and performance tracing logic, but anybody can take away the 300th “perfect customer-facing SaaS dashboard” you built inside a dead company nobody cares about anymore.

ops / infrastructure / cloud rant

you can configure a single server with 128 ARM cores running at 3 GHz (full cores not fake threads) and 4 TB RAM for under $20k USD as of this writing, then you can add an additional 30 TB of combined NVMe and SSD storage for another $5k. So, for around $25,000 one time cost, you can outperform basically $300,000 of AWS spend per year. But you’re “afraid of servers” so you would rather pay AWS $500,000 per year instead of spending $50k once to buy two servers (because you think owning one server suddenly costs you $5 million per month in headcount to “manage the server”). gotta love our modern landscape of computational ignorance from all corners of the world.
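
for the arithmetic-inclined, here is a rough sketch of that comparison using only the round numbers quoted above ($50k once for two servers vs. $500k per year of AWS). the function names and the `yearly_running_cost` knob (power, colo, whatever you believe owning hardware really costs) are made up for illustration, and the zero default is an assumption, not a measurement. plug in your own numbers before arguing with anyone.

```python
def cumulative_costs(years, one_time_hardware, yearly_running_cost, yearly_cloud_cost):
    """Return (owned_total, cloud_total) in dollars after `years` years."""
    owned_total = one_time_hardware + yearly_running_cost * years
    cloud_total = yearly_cloud_cost * years
    return owned_total, cloud_total


def break_even_years(one_time_hardware, yearly_running_cost, yearly_cloud_cost):
    """Years until owning hardware is cheaper than renting, or None if never."""
    yearly_saving = yearly_cloud_cost - yearly_running_cost
    if yearly_saving <= 0:
        return None  # renting never costs more per year, so no break-even
    return one_time_hardware / yearly_saving


# Figures quoted in the post.
TWO_SERVERS_ONE_TIME = 50_000
AWS_PER_YEAR = 500_000

# ASSUMPTION: the post gives no running-cost figure; 0 is a placeholder.
ASSUMED_YEARLY_RUNNING_COST = 0

for years in (1, 3, 5):
    owned, cloud = cumulative_costs(
        years, TWO_SERVERS_ONE_TIME, ASSUMED_YEARLY_RUNNING_COST, AWS_PER_YEAR
    )
    print(f"{years} yr: owned ${owned:,} vs cloud ${cloud:,}")

print("break-even (years):",
      break_even_years(TWO_SERVERS_ONE_TIME, ASSUMED_YEARLY_RUNNING_COST, AWS_PER_YEAR))
```

if you think the running-cost assumption is too generous, raise `ASSUMED_YEARLY_RUNNING_COST` until the break-even stops looking embarrassing for the cloud side. the point of the sketch is only that the comparison is a few lines of arithmetic, not a mystery.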

people truly do not understand the power of modern hardware. Every trendy VC SaaS is running around renting AWS compute like we never left 1970s time sharing systems because VCs are stuck in a 1990s mindset where “a nice server” is some Sun beast costing 1 million dollars plus another 1 million dollars for an oracle license plus an extra 1 million dollars per year for required support contracts. “wow, instead of giving Sun and Oracle $3 million per year, we can just pay AWS $500k per year! BY GRABTHARS HAMMER WHAT A SAVINGS!” madness. time marches on.

these are things you could realize if you had actual infrastructure competency instead of repurposing 24 year old javascript-only developers to “do devops” and they just end up building yet another $100k/year AWS microservice solution across 900 deployed containers which would have worked faster and cheaper as a single service on a single server for less than $200 per year (gotta love those “12 factor app” brainworms).

but now, yes, it is finally time for buzzword the third, but, what is this? there’s a tie? the third buzzword is a bonus round!

generative ai / full stack developer / data science

let’s get into some weird stuff here.

again, the goal here is buzzwords with technical meaning but broken casual meaning (none of this is about “empty buzzwords” where the purpose was to always be vague and meaningless just for thought leaders to do their thing like they did with: big data, push technology, microservices, cloud computing, IoT, or more disastrous modern things like “revops” for “revenue ops”???? or “DevSecMLGDPRComplianceSOC2VisionProOps”…)

generative ai

buzzword du jour especially since you can really stretch it out into lots of syllables: gen-er-at-ive ar-ti-fi-cial in-tel-li-gence and we all know more syllables makes you sound more smarter. you can see the sly smile every time some media personality uses the phrase without knowing what it means because they think just by invoking the technowords they are one of the cool kids now.

traditional ai is of the garbage-in-garbage-out variety, except now generative ai (praise be) is of the garbage-in-very-pretty-garbage-out variety. yes, useful. yes, it will destroy the world. no, just because you know the name of it doesn’t mean you understand anything about it or its implications or its use cases or when or when not to use it.

generative ai is a fun out-group buzzword because the only reason to say “GENERATIVE AI” is if you are attempting either a marketing manipulation play or a political regulation play. It’s not specific enough to be a usable technical term and it’s vague enough where everybody can just smile and nod when it’s used casually to move a sentence along.

really want to have your mind blown: any old school ML classification is also generative because it generates classifications — how’s that for soup, huh?

but clearly by generative ai they actually mean: our 5th generation causal models phylogenetically derived from energy based models now highly parameterized and capable of generating human-level-or-better output at human-level-time-scales, but such verbiage isn’t conducive to economic or political manipulation.

full stack developer

FSD coming very soon now.

Nobody knows what “full stack developer” means. The original joke after “full stack developer” became a tech term was “lol i’m full staack because i do javascript on the client and on the server.” Yet, somehow (he returned), dual-wielding javascript became the actual and only meaning of the phrase!

Of all the things a “stack” entails, our entire industry has collectively decided the totality of human technology is encompassed wholly within javascript servers and javascript browsers.

There’s no actual term for the traditional role of “web developer” or “application developer” where you configured and managed and optimized everything from operating systems to database servers to DNS to filesystems to distributed user management to CDNs to local networking to ISP connections to application servers to front-ends all by yourself or with a small team of also highly competent people. Such a thing can’t even be conceived of by tech minds these days! How far we’ve fallen.

sure, i’m a full stack developer because I can run npm on a server and I can upload the results to a client. If only Turing could see us now.

maybe one day “full stack developer” will change to mean “WASM on the server and WASM on the client” instead, but I don’t see the industry restoring the proper respect and reverence for BOFH everywhere as we deserve.

look at this madness. literally a 10+ year process to learn all these aspects competently, but sure, you are the smartest human ever to live so you can speedrun a lifetime of experience in half a year then bounce to a $300k/year job just to be a burden to other employees who actually know what they are doing (though, it’s not any individual person’s fault they are born into such a broken system where this is what they are told to aspire to in the first place and they don’t have context to know any better):

also lol people really don’t understand “using a tool” has nothing to do with “knowing what you are doing.” where is the 5 years of algorithms and 5 years of understanding operating systems and networking and metrics generation and consumption and alert management and logging generation and consumption and 5 years of understanding business models and 5 years implementing your own programming language virtual machines and 5 years of understanding datacenter-as-a-service deployment platforms and 5 years of learning design and typography and 5 years learning service automation and failure scenarios (without mentioning: never-ending years learning underlying APIs for every tool and library constantly changing, but you have to get up to speed with the past and current state of the art, and web servers and security (network) and security (compliance) and security (os) and security (app) and and and?) basically, nobody wants to go through the “developer slug stage” anymore. Everybody wants to hard launch out of “learn python in 24 hours” directly into pretending they have 30 years of experience with compensation to match. Sorry new devs, you have to slug before you can senior.

I think my guttural problem with “full stack” weenies is, in my mind, if you call yourself “full stack” you are proclaiming to be an utter top-of-the-industry expert in everything from low level terabit networking all the way up to debugging CSS layout problems and DNS problems and storage device problems and business models around billing practices and marketing and non-delusional TAM calculation and human-centered design practices and a dozen other things. Having an entire job title with a technical meaning of “i am an expert in everything” but a practical meaning of “lol javascript in TWO places?!?!?!” is just wrong and everybody should stop. It also dilutes actual lifetimes of experience because how do experts say they are experts when novices with 6 months of ADHD practice are running around saying they know everything about everything?

takeaway: calling yourself a “full stack” developer when you are only skilled in “surface-level app delivery” is like an esthetician calling themselves a “full body doctor.”

data science

the four horsemen of the computational apocalypse were formed around 2010 when all these terms started trickling out of thought leaders everywhere: devops, full stack, data science, and maybe data analytics.

here’s a good example of how far we’ve fallen: I literally do not understand what anything means in this thread — the other danger of buzzwords: domination of terminology by the know-nothings generates such a linguistic drift only novices know the future. So, sure, trickle out continually diluted broken terminology into people who don’t have a complete understanding of environments. Now people begin treating their own watered down passively consumed terminology as gospel instead of previous technical shorthand, then baseless meaningless terms become concrete and proliferate tragically. we end up with people trying to plan their lives around made up terms on dead-end career tracks all because google thought leaders wanted to give presentations at oreilly conferences in 2010.

but, back to data science, or datasyenzzence as the cool kids say.

what is data science? i have no idea. let’s ask google and click on the first result:

Data science is the study of data to extract meaningful insights for business. It is a multidisciplinary approach that combines principles and practices from the fields of mathematics, statistics, artificial intelligence, and computer engineering to analyze large amounts of data. This analysis helps data scientists to ask and answer questions like what happened, why it happened, what will happen, and what can be done with the results.

Such an interesting definition versus the reality I’ve experienced over the past 10 years or so across multiple companies. In practice, I see organizations usually deploy the “data scientist” role in the wrong places and then those roles end up being huge road blocks towards productivity (unless “data science” is just attached to some 100% isolated “analytics” department island as overpaid report generators).

If I had to define “data science” from the real world, it would be more like: a person who doesn’t want to be a programmer, but they want to generate answers from data, so they use Excel and R using an academic-but-not-quite-practical understanding of data manipulation to generate “numbers the executives like to see,” then, if the numbers are useful enough, everything gets re-implemented by “real developers” using more scalable practices. I’ve never seen a “data scientist” who couldn’t be replaced by a more productive “real developer” with time to focus on an actual data problem and solution. oh, you ran a t-test? LET ME CALL THE NOBEL COMMITTEE, no wait, the other thing, let me call a 19 year old who just took an undergrad intro to stats course, or, more likely, let me just load up a language model code interpreter and have it do more useful work in 3 minutes than “data scientists” output in a week.

data science seems to be the gateway for people who “want to make computer programmer money” but “don’t want to be programmers” so we invented this entire imaginary field of “process data, but kinda ineptly, and offload the real work onto other people once an initial data extraction trial is completed.” It’s all a game of seeing who can load the largest CSV into excel before their laptop explodes.

one of the first jobs AI will eat completely is marginally competent multi-paradigm employees and it can’t happen soon enough. (one like on this post equals a one day sooner acceleration of the ai apocalypse!)

Conclusion

words. just say no to using words. everything should be communicated in meaning vectors of 768 dimensions or greater.

class traitors. just say no to encouraging class traitors advocating for sending all your money to AWS instead of hiring competent people yourself. there are countless tech-ignorant pro-cloud anti-worker threads online where “programmers with no infrastructure experience” continue repeating it costs $10 million per month in employee costs to deploy one server so, obviously, you have to give all your money to Amazon instead. arguments from computational ignorance are also arguments from being class traitors because your pro-cloud, anti-worker thought patterns are removing humans from employment and just giving their compensation to Amazon instead. There is no “cloud vs on-prem” argument because the argument is actually “pay amazon or pay humans” and every time you side with “pro-cloud” you are inherently being “pro-monopoly, pro-trillion-dollar-corporate-centralization and anti-individual-human.” (of course there are probably cloud agitprop nerds who argue centralizing all money globally in one corporation improves resource allocation, so we should all aspire to give all our money to amazon thus ensuring the most efficient use of cash for all time, but this is also irrelevant if amazon either won’t hire you or hires only at oppressive sub-surplus-clearing rates)

be pro-human, but pro-good-human, and be anti-monopoly, unless you own the monopoly, but don’t be a rube advocating for monopolies you don’t own getting bigger because you are too afraid to make your own decisions. nobody got fired for buying IBM, but everybody should be fired for choosing AWS and GCP and Azure over hiring your own employees and creating your own platforms. “but we can’t afford our own platform because AWS is so expensive and our company already doesn’t have enough money!” — okay, but what if the cause of your failure is your assumption AWS is good in the first place? what if you don’t realize you can cut your “cloud spend” from $5 million per 5 years to running your own hardware for $200k per 5 years instead?

it’s almost like the entire planet forgot compute and storage and networking gets cheaper by the year. everybody just agreed to lock in 2010-era compute prices as the fair price for compute and storage, so you will always pay the same hosting rates while provider costs continue to decrease exponentially forever. what a savings!
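
a toy model of the same complaint, assuming (purely for illustration) the provider’s cost to deliver a unit of compute drops by a fixed percentage every year while the price you pay stays frozen. the 20% yearly decline is a made-up number, not data, and `provider_margin` is a hypothetical helper, not anybody’s real pricing model.

```python
def provider_margin(years_elapsed, yearly_cost_decline):
    """Share of a frozen price kept as margin after `years_elapsed` years.

    ASSUMPTIONS: the price started equal to the provider's cost (zero margin
    in year 0), the price never changes, and the provider's cost shrinks by
    `yearly_cost_decline` (a fraction, e.g. 0.20) every year, compounding.
    """
    remaining_cost_share = (1 - yearly_cost_decline) ** years_elapsed
    return 1 - remaining_cost_share


# ASSUMPTION: illustrative decline rate only; pick your own.
ASSUMED_YEARLY_DECLINE = 0.20

for year in (0, 1, 5, 10, 14):
    margin = provider_margin(year, ASSUMED_YEARLY_DECLINE)
    print(f"year {year:>2}: {margin:.0%} of what you pay is margin")
```

the exact rate doesn’t matter much: any steady decline in provider cost against a frozen price compounds toward the same place, which is you paying mostly margin.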

to conclude, i guess remember overall white collar crime isn’t illegal if you don’t leave a paper trail and your conspiracy has no defectors.

a great start to PMA 2024