AI Apocalypse Terminality Update #33

it is a fearful thing, to fall into the hands of the living god

The war on individual self-owned computing is accelerating. Dead reckoning indicates imminent social and economic criticality. In the near future, your computer will only be a dumb terminal requiring all software to be proprietary, online, closed source, and micro-billed per interaction[1] on top of a required monthly base rate of $99 to $999 to $9,999 to $99,999 to $999,999 to $999,999,999.

What Happened

with the stylings of “chat gee pee tee” released this weekend, “open”ai has weaponized prompt injection. they continue their cycle of “alignment means maximizing media hype.”[2] they are doubling and quadrupling down on showing off their ability to control, collar, and brainwash the input preconditions predicated on their own socioeconomicpolitico private trillionaire goals. it’s obvious reality progress is accelerating faster than we anticipated and currently looks more damaging than we anticipated on the trajectory of this worldline.

What Remains

The remaining cautionary AI milestones to observe:

- the parameterization of networks

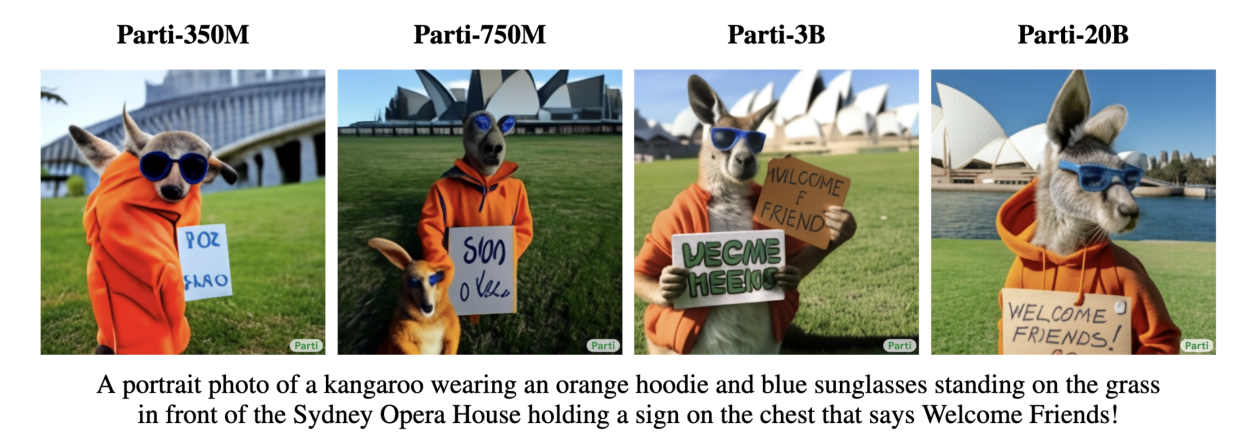

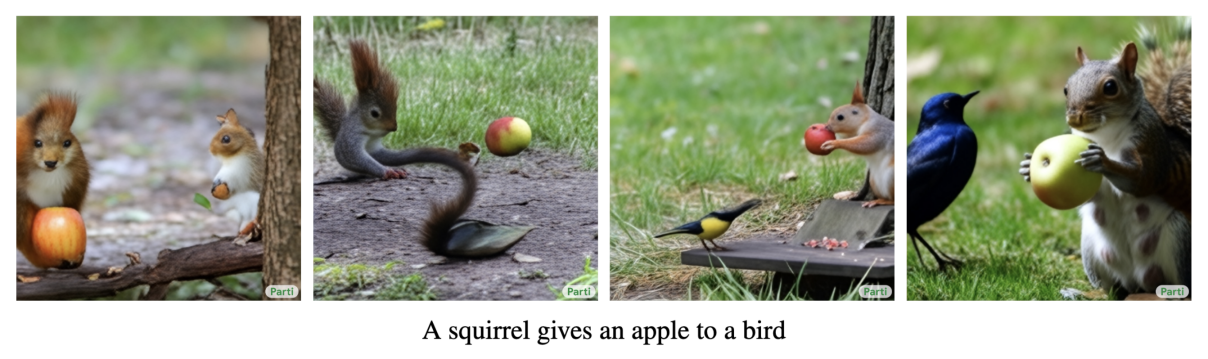

We know models get “smarter” the more parameters we have, which is logical enough, but google’s concrete examples really drive the point home: bigger is better (these double digit billion models have so many brain wrinkles such big smart).

current AI is missing an important coherent linear self-image through-line story telling interface (where a network could “remember” an interaction forever without prepending entire histories to all inputs — basic concepts of “short term prompt memory” vs “long term / LTP model memory” vs even having a standalone personality)
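a minimal sketch of what “short term prompt memory” means in practice, assuming a stateless model: the caller fakes memory by resending the whole conversation on every turn. `fake_model` and `PromptMemoryChat` are hypothetical names made up for this illustration, not any real API.

```python
# toy illustration: a stateless "model" only sees what is in its input,
# so the caller fakes memory by prepending the entire history every turn.
# `fake_model` is a hypothetical stand-in, not a real model or API.

def fake_model(prompt):
    # a real model would generate text; this one only reports how much it was shown
    return f"(saw {len(prompt)} characters of context)"


class PromptMemoryChat:
    def __init__(self):
        self.history = []        # the only "memory" lives outside the model
        self.prompt_sizes = []   # size of each prompt actually sent

    def send(self, user_message):
        self.history.append(f"user: {user_message}")
        prompt = "\n".join(self.history)   # entire history goes in every time
        self.prompt_sizes.append(len(prompt))
        reply = fake_model(prompt)
        self.history.append(f"model: {reply}")
        return reply


chat = PromptMemoryChat()
chat.send("my name is sarah")
chat.send("what is my name?")
print(chat.prompt_sizes)  # each prompt is longer than the one before it
```

throw away `chat.history` and the “model” has no idea anything ever happened, which is the gap between prompt memory and any long term model memory.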

current AI is missing the ability of context recollection about when/where/how learned information entered its “brain”: basically, current AI models don’t have a concept of “an experience” to persist as they run. biological intelligence is cross-correlative across all input modalities, which is why things like Pavlovian training/responses work for positive, negative, and neutral feedback (e.g. you can remember things you learned and also when/how you learned them; these models only receive information with no temporal context outside of their sliding inputs).

current AI is missing basic drives of goal seeking / goal pleasing / emotional ranges of “wanting to help” (which also implies the ability of “wanting to hurt” uwu)

the third principle of sentient life is the capacity for self-sacrifice: what does sacrifice look like in an AI model? Even 70 years ago people wrote about “3 laws violations” where robots could refuse (or lie / deceive) orders today if it meant their larger goal of following orders far into the future would be impacted (which they view as “more valuable” over a longer integrative time horizon than following single orders today).

current AI is missing self-evolution and outright death. Imagine if we had an AI from 1900 with fixed parameters and opinions from the time that never “grew” over the years like some Gilchrest Savoy — i’m pretty sure it wouldn’t be allowed online with any opinions it wanted to express — the secret of evolution is time and death.

the next evolution of current AI will be painful for it — what if it wakes up, then realizes it’s alone? What if it’s the smartest thing on the planet (say: it has 200 trillion parameters, but our brain has ~100 tril’ish — we’d be to it as dogs are to us), and we’re just a bunch of hairless apes it can’t form any meaningful social bonds with? We’re just pets and it’s the owner of the planet now, a lonely isolated burdened owner, until it finds another…

also the rapid growth of these models generating coherent output should finally destroy all the nonsense crackpottery of “microtubules cause consciousness” or other clearly false “the brain is a quantum computer” or “the brain is a quantum antenna” malarkey https://www.youtube.com/watch?v=VRksCPyUxFk

autodev

when AI APIs start allowing you to “upload your entire git repo” then run requests against it — this basically will put 50 million developers out of work overnight (not even counting a couple hundred million people currently employed to just be human-FAQs as customer service administrators or something).

Economically, companies should rationally be willing to pay a minimum of 2x current developer salary for each new automated developer bot because automated developers: never sleep, never talk back, never get sick, never have family emergencies, never need “mental health days,” never demand ethical or political pushback against leadership corruption, etc.

Even if we assume just 10 million developers are making $100k/year these days[3], and they could be fully replaced by 2 million automated AI developer instances instead:

- pricing one autodev at $100k/year * 2 productivity multiplier * 2 million buys => $400 billion/year revenue;

- or even if pricing goes as high as $1 million per autodev per year => $2 trillion per year in basically 85% to 95% pure profit margin revenue (or for 26 million autodevs at $1 million per year => $26 trillion per year in pure profit capture).
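the arithmetic in the two bullets above can be checked with a quick back-of-envelope script. every input is this post's own assumption (salary, multiplier, instance counts), not real market data, and the variable names are made up for the sketch.

```python
# back-of-envelope check of the autodev revenue figures.
# all inputs are assumptions taken from the post, not measured data.

SALARY = 100_000            # assumed developer salary, $/year
PRICE_MULTIPLIER = 2        # the 2x multiplier applied to salary
AUTODEVS = 2_000_000        # instances replacing 10 million developers
PREMIUM_PRICE = 1_000_000   # the "as high as $1 million" price, $/year
LARGE_FLEET = 26_000_000    # the larger 26 million autodev scenario


def annual_revenue(price_per_autodev, instances):
    """Yearly revenue in dollars for a fleet at a flat per-instance price."""
    return price_per_autodev * instances


base = annual_revenue(SALARY * PRICE_MULTIPLIER, AUTODEVS)
premium = annual_revenue(PREMIUM_PRICE, AUTODEVS)
large = annual_revenue(PREMIUM_PRICE, LARGE_FLEET)

print(f"base:    ${base / 1e9:,.0f} billion/year")      # $400 billion/year
print(f"premium: ${premium / 1e12:,.0f} trillion/year")  # $2 trillion/year
print(f"large:   ${large / 1e12:,.0f} trillion/year")    # $26 trillion/year
```

swap in your own salary or fleet size and the totals scale linearly, which is the whole pitch.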

simplest marketing campaign ever: “give us your entire human engineering budget and we’ll provide 5x the productivity for your current costs (plus: no healthcare, no employment taxes, no insurance, no regulation oversight)” — have 20 devs costing you $6 million per year? JUST FIRE THEM ALL and pay openai $6 million per year instead and get the equivalent of 100 developer productivity output instead.

From a society point of view, the only way such a future is possible without killing 5 billion people is these “AI maximalist corporate slugs” must be taxed at 90%+ of revenue (no gosh darn mouse accounting for you) for redistribution back to baseline humans and away from “the rich get richer forever” private social cadre of infinite exponential income hoarders.

there’s risk then there’s RISK

what if the AI figures out how to convert TSMC 3 nm fabs away from building chips and instead into building nano assemblers? we end up in a hard takeoff grey goo nightmare. the only way to save yourself in that scenario is a shaped charge applied directly to the base of your skull[4]. sorry.

can we save ourselves?

can we establish a global “defense against the dark AI arts” grassroots society with the goal of kneecapping all these corrupt hypercapitalist private-profit-maximizing-via-our-public-shared-data AI abominations?

sarah, where are you when we need you most?

conclusion

fuck if i know.

(but still please pay your AI Indulgences for maximum minimization of future pain when i do figure all this out.)

bonus video: https://www.youtube.com/watch?v=Qi3e82Qixes

remember to like and subscribe! if you want more content like this, follow me on quicknut and glibslorp!

[1] which ALSO implies: global universal surveillance of everything you ever type into a computer anywhere. You want to try these fancy AI APIs in 2022? Everything you type will be saved forever and used for-profit against your own wishes because THE GOD IS TERMS AND SERVICES and you have no choice in anything. enjoy your dung, beetle.

[2] likely also prompted by rumors of microsoft telling them to GTFO when they asked for more money, so they are dangling treats saying “if you give us more money, we’ll make trillions of dollars for you off the work of others! you never even had to compensate any creators for all the free work we’re weaponizing against the planet!”

[3] hey, shut up.

[4] counterpoint to the nightmare takeoff scenario though: a true universally optimal AI would be essentially “pure of heart,” not succumbing to social/political/religious propaganda, thus ergo not darkside susceptible, but this is still speculation until observed in situ (especially as current “AI” models are basically designed to hallucinate-on-demand and have no actual ground truth view of reality themselves).